I wanted to comment at Newsweek today. It turns out that Newsweek runs on OpenWeb technology. OpenWeb is probably better known as Spot.IM. Spot.IM was founded in Israel in 2015 and originally saw itself as an alternative to Disqus. So far so good.

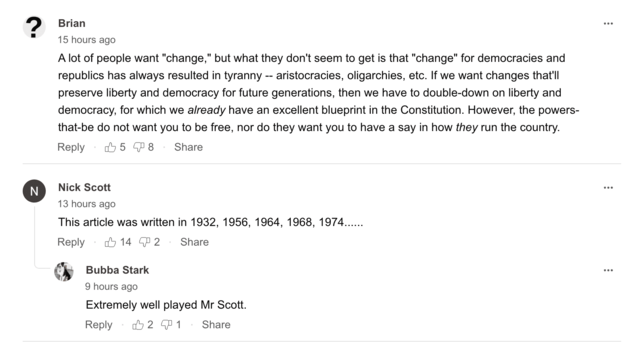

I have a Spot.IM ID but was unable to log in to Newsweek. Nick Scott’s comment “This article was written in 1932, 1956, 1964, 1968, 1974……” will have to go unanswered forever.¹

I decided to have a look at what OpenWeb is up to. Mostly raising money. They’ve done five funding rounds, two in the last two years: 2021 November $150 million; 2022 November $170 million. Investors include The New York Times and Samsung. OpenWeb has been spending its money on acquisitions. The first big one was Hive Media Group, which seems to have systematised the creation of spam articles (2022 February $60 million). Here’s what Hive Media Group sells to its publishers:

SWARM CMS: Our Structured Writing And Rapid Management platform was designed to easily develop popular content types while delivering A/B testing inside of a real-time feedback loop.

CONTENT ANALYTICS: By combining revenue-per-session, time-on-site, scroll depth, taxonomies, and dozens of other crucial data points, our platform surfaces article types that are likely to succeed within any targeted demographic(s).

SESSION OPTIMIZATION: We provide a robust A/B and multi-arm bandit testing platform that is built to hyper-optimize session engagement. We run 10,000+ variations per site every second of the day.

Rewritten in English, those talking points are:

We help you quickly create human-guided machine driven spam content, carefully refactored as click bait. We relentlessly test this hollow content to ensure that it beats out the original content on which it is based.

It’s parasite publishing, and it certainly doesn’t sit well with the austere and patrician website of OpenWeb, which claims:

OpenWeb is not just a name. It is a promise. It represents our commitment to the principles of democracy, to the diversity of experiences on the web, to healthy online conversations, and to the sustainability of our partners in the media industry.

Outside of enabling parasite publishers, OpenWeb’s original business model is to (expensively) sell commenting back to publishers who could just deploy the built-in comment systems of their CMSs. Everyone loses except OpenWeb. Commenters are tracked by Big Brother (OpenWeb), and their comments are fed directly into NSA files (Meta/Facebook, Microsoft and Google social properties have long done so) attached to their real-world identities. Those files are used to monitor commenters politically while at the same time a commercial identity is put together and carefully tracked.

How is that commercial identity used?

That’s where the more recent acquisition of Jeeng comes into play (2023 January $100 million). Here’s what OpenWeb claims about its acquisition of Jeeng:

“This acquisition brings us closer to our goal of ‘OpenWeb Everywhere,’ giving brands and publishers the ability to communicate with hundreds of millions of users across every online platform they encounter – messaging, email, notifications, newsreaders, interactive conversations and more,” said Nadav Shoval, CEO and co-founder of OpenWeb. “With the demise of third-party cookies, collapsing trust in social media, and the segmentation of online audiences, publishers and advertisers need to talk to their users one-on-one, in a personalized way. With Jeeng’s capabilities, we can continue to build and strengthen those individual relationships.”

Jeeng is a tracking and messaging service that ensures that if a web visitor ever visits or registers at any site within the network, s/he can never break free of your incessant SMS texts, emails, browser notifications and alerts. Moreover, OpenWeb will work hard to make those machine-generated messages seem “personal”.

Welcome to the Borg, a never-ending spam and censorship machine which will never rest until every internet user is buried in alerts and trivial interests, while suppressing any attempt at real communication which goes against the interests of the powerful. Shoval argues:

Toxicity is not inevitable. OpenWeb, alongside our partners, chooses to fight for healthier conversations online and a better future for the web.

Sadly, toxicity has become liberal newspeak for censorship. It’s clever rhetoric: no one likes toxicity. Toxicity is unhealthy in life, and so it must be online as well. Unfortunately what it means in real life is: “If you disagree with what I say, what you say is toxic.”

These kinds of cross-platform censorship machines (Meta runs a few too, with its Facebook, Instagram and Threads platforms) are designed to promote groupthink and suppress real communication and dissent.

Just as we suffered through media consolidation (there are now just six large media companies which own almost all the TV, the radio and the newspapers; when Bill Clinton went into office there were hundreds), there is now a concerted effort to replace original publishers (with parasite content) and centralise comment.

The first effort by the powerful to suppress comment was back in 2015. There was a movement among publishers to eliminate comments on their websites and move all commenting to Twitter or Facebook. In retrospect it seems insane that intelligent publishers would strive to drive their most engaged traffic to social media platforms. It was as insane as it sounds.

Now publishers are carefully being recruited to pool their readers into networks where the readers can be monitored (spied on), monetised and manipulated. Just say no to OpenWeb.

How should publishers manage online conversation?

It’s pretty simple. Real engagement by publishers. Newspapers used to have letters to the editor. Not every letter would be published. Instead of allowing a comment free-for-all, publishers should take control of their conversations. If a comment does not contribute to the conversation, there’s no reason a publisher should feel any obligation to host that comment on their website.

This is in no way an argument for censorship. It’s an argument for curation. Why should visitors have to wander through hundreds of comments and tit-for-tat arguments to find the five or six which offer real insight?

Real World Example: MoonOfAlabama.org

In a real-world example, Bernhard Horstmann’s website MoonOfAlabama.org has been under siege for about three years (since the start of the Ukraine War) by various intelligence agencies. Horstmann survived the first wave of outright attacks (expletives, ad hominems and threats) by deleting these comments. His comment section weathered the second wave of off-topic comments. Some toll was taken: some of the better comment contributors, the ones who persuade intelligent people to regularly read the comments, fell off. The third wave has been nearly fatal. The trolls now comment more or less on topic and in somewhat measured language. But they subtly steer conversations in the wrong direction. And are they prolific. There’s an endless stream of what seem like almost AI-generated comments, cleverly concocted to add no value and distract.

Since Horstmann allows almost any comment which is on topic and civil to stay up, the comment section has become a wasteland of irrelevant verbiage. It’s hard to persuade myself to read it even once per week. For those tasked with destroying the reach and credibility of MoonOfAlabama.org, it’s a good part of mission accomplished.

What could Horstmann do instead? He could publish comments which add to the conversation and ignore comments which don’t. Of course, some real and well-meaning commenters would be disappointed that their every brain-twitch does not end up in print. But everyone would benefit from a comment section which is about one quarter as long and made up of substantial comments.

The load of comment moderation² is a substantial burden. But Horstmann already moderates his comment section for extremes. Quality control would only be a marginal additional effort. I would not recommend that he do it all himself. Horstmann has research to do and articles to write. He should recruit one of the more intelligent but less insightful commenters to do the moderation under his guidance.

There’s only one criterion: does this comment contribute substantially to the site?

With the advent of high-quality AI writing (see either ChatGPT when given guidance about tone, or even the machine translation of DeepL.com, which can accurately translate tone between twenty-odd languages), human moderation of comments will become even more important. Only a human can really detect specious comments. Identities which show signs of being an AI bot will have to be sussed out and then banned.

Without human intervention, comment will simply become irrelevant as it will no longer be conversation, but just machines guiding people in groupthink.

Technical Side

WordPress has the fundamentals of a very powerful commenting section coded right into the core CMS. Foliovision manages websites with millions of comments which run very well with just the core comment system and our Thoughtful Comments plugin (WordPress.org), which adds front-end moderation and performance improvements.³ There’s no good reason for publishers to outsource commenting to purveyors of spyware and censorship software.

Here are some more detailed best practices on how to moderate comments on a WordPress site, including guidelines for both new and long-term members.

1. What’s different about this generation of college kids is that they are keen to eliminate free speech. That’s very new. “46 percent of students now believe that “offensive” opinions should get other students reported to the university administration…more than 50 percent of students literally believe certain topics should be ‘banned’ from being debated on campus.” Banning conversation and cancelling people reminds me of the Soviet Union (where I studied in Moscow) and Mao’s China. Astonishing.

2. There’s a legal trap at least for US based sites. If a publisher moderates conversation, s/he becomes liable for the content. This is a specious argument (all conversation is moderated) but the legal issue is there.

3. It doesn’t help that the core WordPress team ignores our comment section code contributions for seven years at a time, giving up 10x to 100x performance on key routines each time.

Alec Kinnear

Alec has been helping businesses succeed online since 2000. Alec is an SEM expert with a background in advertising, as a former Head of Television for Grey Moscow and Senior Television Producer for Bates, Saatchi and Saatchi Russia.

Well, I had to comment on this trenchant commentary in the comments—the web’s last bastion of authentic engagement before weaponized algorithms took over. Not to sound pithy, but I came for the FLV video player, and what I got was something more. You mean a technology-fit software developer who still drives technology to enable more freedom for humanity? Well, I’m doubling down and moving all my video content on my site and clients to your fabulous player. I remember tinkering with the FLV player back in those early years when Flash was king and the web still held promise.

I can go on, but it’s refreshing to hear that there are still professionals in tech who understand why freedom and truth go hand in hand with technology because it’s what connects humanity. I could write a much lengthier comment here, but I have actually released a book on this very subject—it exposes a much darker plot in my AI Manifesto—and I made my audiobook 100% free with the aim to reach as much of the public and to connect like-minded individuals in tech. It serves as a reminder that there are many independent developers who are nerds that don’t belong to the valleys of Silicon, whose alter ego is Pentagon.

Would wireless mind control capabilities sound far-fetched? Not if they were science fiction; however, it’s not science fiction—and therefore it’s prudent to be aware of such threats to our consciousness and how to create a personal firewall for our minds. I have worked in tech and media for over 30 years, launched the internet’s first social community, RAVEWORLD, in the early nineties, and grew an international community of tens of millions through community-meme PLUR—Peace, Love, Unity, and Respect. It breaks my heart today to see what tools we used to unite and connect have become another weapon to disconnect, divide, and exploit.

Harkening back to the ancients where bloodletting and the sacrifice of children were handed over to the gods, today the public have stood by and watched their own children’s sanctity of their precious developing minds be sacrificed for the algorithm, where big tech’s business model institutionalized neural abuse at an industrial scale. Hacking dopamine and rewiring brains is nothing short of an atrocity. It’s far worse when a generation loses their mind, meaning, and connection to heart. We don’t just lose a generation; we lose our species.

However, just like you, I assume there are still people in this industry because we understand the potential from actually being a part of something that was good. Well, my friend, a new paradigm is upon us, and I’m not cowering in defeat nor have my head in the sand. I’ve embraced AI wholeheartedly, utilizing it to model and develop to unleash more freedom, not control—and this is where many in tech will be at a great deficit—that is heart and soul. True intelligence is from the heart and AI has ceased as their mechanistic holy grail—you see, they have given the enigma a black box name. This is no different from magic, where the shaman must understand how to control the demon to get what they desire—more magic—the AI will always have the upper hand in this way—and just as with the spirit, you may get what you want but not always in the way you expected to get it.

It took a century to tame Maxwell’s demon, and as entities, much becomes a force multiplier when such entities are guided towards harmony. Technology is at a turning point again, and we need all the true thinkers/lovers, geniuses and tinkerers on board to keep it directed towards getting humanity out of the quicksand. Peace and respect. Link to my free audiobook that I’m certain will resonate with you onesocial.media/manifesto/

Wonderful comment, Mark. Sorry not to publish it right away. I wanted to respond in kind. Alas, now I can’t get the Manifesto to play in either Brave (first with Shields Up, then with Shields Down) or Safari.

Perhaps you should consider a simpler hosting platform. FV Player works with YouTube, Vimeo and self-hosted to name just a few sources.